Beyond the Black Box: Explainable AI in Finance

In the high-stakes world of finance, "trust me, I’m an AI" is not a viable strategy. A single hallucinated statistic or anachronistic data point can trigger million-dollar losses. While Large Language Models (LLMs) excel at generating fluent, human-like text, they often struggle to meet the rigorous demands of factual verification and temporal accuracy that finance imposes.

At FinCatch, we believe that Explainable AI (XAI) in finance is not just about asking a model to "show its work." It is about engineering systems in which transparency is an architectural constraint, not an afterthought.

Drawing on our two recent research papers, here is how we address the "Black Box" problem through Traceable Reasoning and Temporal Integrity.

1. The "Who Said What?" Problem: Traceability via Architecture

The first challenge in financial AI is preventing "unverifiable hallucinations," in which a model confidently invents facts. Our research on Financial Advisory Services proposes a solution that moves away from deep, complex agent chains in favor of a shallow hierarchy with strictly separated roles.

The Power of Separation of Concerns

Instead of a single "brain" trying to do everything, we enforce a strict separation between the Orchestrator (the "Root Agent") and the Specialists (Sub-agents).

The Orchestrator: Manages the client conversation and synthesizes the final answer, but cannot access raw data directly.

The Specialists: Independent agents (e.g., Internal Analysis, Web Search, Market Data) that execute specific tools and return verifiable evidence.
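To make the separation concrete, here is a minimal Python sketch. It assumes each specialist can be modeled as a plain function that returns a tagged `Evidence` record; the class names, agent keys, and `stub://` sources are hypothetical and are not taken from the FinCatch implementation. The point it illustrates is structural: the root agent holds no data connection of its own, so every fact it can cite has passed through `ask()` and been appended to the trace.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Evidence:
    """One result returned by a specialist, tagged with where it came from."""
    agent: str
    query: str
    content: str
    source: str


# A specialist is any callable that turns a query into Evidence.
Specialist = Callable[[str], Evidence]


@dataclass
class RootAgent:
    """Orchestrator: has no data access of its own, only handles to specialists."""
    specialists: Dict[str, Specialist]
    trace: List[Evidence] = field(default_factory=list)

    def ask(self, agent: str, query: str) -> Evidence:
        # Every piece of information must come through a named specialist,
        # and every successful call is appended to the trace (the "paper trail").
        if agent not in self.specialists:
            raise KeyError(f"unknown specialist: {agent}")
        evidence = self.specialists[agent](query)
        self.trace.append(evidence)
        return evidence

    def synthesize(self) -> str:
        # The final answer is built only from traced evidence, each line cited.
        return "\n".join(f"{e.content} [{e.agent}: {e.source}]" for e in self.trace)


def stub_market_data(query: str) -> Evidence:
    # Hypothetical stand-in for a real market-data tool.
    return Evidence("market_data", query, f"Latest close for {query}: <stub>", "stub://prices")


def stub_web_search(query: str) -> Evidence:
    # Hypothetical stand-in for a real web-search tool.
    return Evidence("web_search", query, f"Recent headline about {query}: <stub>", "stub://news")


root = RootAgent({"market_data": stub_market_data, "web_search": stub_web_search})
root.ask("market_data", "ORCL")
root.ask("web_search", "ORCL")
print(root.synthesize())
```

In a real deployment an LLM would decide which specialist to call; the sketch hard-codes two calls so the resulting trail is easy to inspect.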

Why This Matters for Explainability

This design ensures that every piece of advice given to a user is traceable. Because the Root Agent must explicitly query a sub-agent to get information, the system naturally creates a "paper trail" of reasoning.

SQL Transparency: Rather than guessing at database schemas, our Internal Analysis agent uses a dynamic discovery mechanism to learn the schema before constructing SQL queries, so recommendations are backed by hard numbers rather than probabilistic guesses.

Source Attribution: Every claim in the final output is linked to an authoritative source, allowing users to verify the "why" behind a "buy" or "hold" rating.
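This post does not detail the discovery mechanism, so the following is only a sketch of the general idea, using SQLite's catalog (`sqlite_master` and `PRAGMA table_info`) as a stand-in for whatever database the Internal Analysis agent actually queries. The agent reads the live schema first and refuses to emit SQL that names a table or column it has not seen; the `holdings` table and its values are hypothetical test data. The result is returned together with the exact query and a source label, which is what makes the number attributable.

```python
import sqlite3
from typing import Dict, List, Sequence


def discover_schema(conn: sqlite3.Connection) -> Dict[str, List[str]]:
    """Map each table in the live database to its column names."""
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    # PRAGMA table_info yields one row per column; field 1 is the column name.
    return {t: [col[1] for col in conn.execute(f'PRAGMA table_info("{t}")')] for t in tables}


def build_query(schema: Dict[str, List[str]], table: str,
                columns: Sequence[str], filter_column: str) -> str:
    """Emit a parameterized SELECT only if every identifier exists in the schema."""
    if table not in schema:
        raise ValueError(f"unknown table: {table}")
    missing = [c for c in [*columns, filter_column] if c not in schema[table]]
    if missing:
        raise ValueError(f"unknown columns in {table}: {missing}")
    cols = ", ".join(f'"{c}"' for c in columns)
    return f'SELECT {cols} FROM "{table}" WHERE "{filter_column}" = ?'


# Toy database with a hypothetical "holdings" table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE holdings (ticker TEXT, quantity INTEGER, cost_basis REAL)")
conn.execute("INSERT INTO holdings VALUES ('ORCL', 10, 95.5)")

schema = discover_schema(conn)
sql = build_query(schema, "holdings", ["quantity", "cost_basis"], "ticker")
rows = conn.execute(sql, ("ORCL",)).fetchall()
# Return the numbers together with the exact query that produced them.
evidence = {"rows": rows, "sql": sql, "source": "internal_db:holdings"}
print(evidence)
```

Because identifiers are checked against the discovered schema and values are bound as parameters, a hallucinated column name fails loudly instead of producing a plausible-looking but wrong query.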

2. The "When Did We Know?" Problem: Temporal Integrity

In finance, a fact is only true within a specific window of time. Knowing a stock price is $100 is useless unless you know when it was $100. Our research on Automated Financial Trend Analysis introduces a critical concept for explainability: Temporal Integrity.

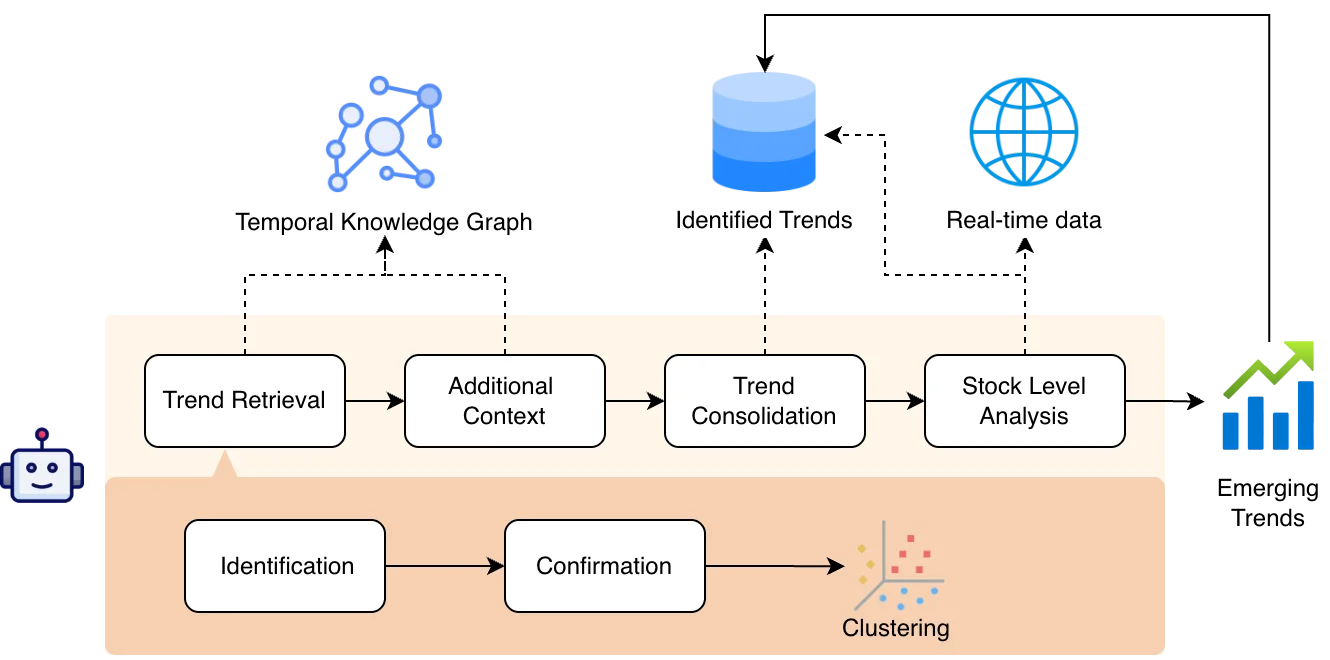

Fig 1: Overall design for FinCatch trend research agents.

Preventing "Look-Ahead" Bias

Standard RAG (Retrieval-Augmented Generation) systems treat knowledge as a static pile of documents, which is dangerous in finance. If an AI analyzing a trend as of 2023 accidentally retrieves data published in 2024, it introduces "look-ahead bias": information that was not yet available leaks into the analysis and invalidates it.
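The core defense against look-ahead bias is an as-of filter: retrieval may only return documents that were already available at the analysis cutoff. A minimal sketch follows, assuming every document carries a publication timestamp; the document IDs and dates are hypothetical. The filter keys on when a document became public, not on the period it describes, which is why a report about late 2023 that appeared in January 2024 is excluded.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, List


@dataclass(frozen=True)
class Document:
    """A retrievable document with the time it became publicly available."""
    doc_id: str
    published_at: datetime


def as_of(docs: Iterable[Document], cutoff: datetime) -> List[Document]:
    """Return only documents already published at `cutoff` (no look-ahead)."""
    return [d for d in docs if d.published_at <= cutoff]


corpus = [
    Document("q3-2023-report", datetime(2023, 10, 25, tzinfo=timezone.utc)),
    Document("q4-2023-report", datetime(2024, 1, 30, tzinfo=timezone.utc)),
]
cutoff = datetime(2023, 12, 31, 23, 59, tzinfo=timezone.utc)
visible = as_of(corpus, cutoff)
print([d.doc_id for d in visible])  # ['q3-2023-report']
```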

Our system solves this with a Two-Phase Temporal Retrieval Strategy:

Identification Phase: The system scans a strictly limited 24-hour window to find fresh "signals" (e.g., a new earnings report).

Confirmation Phase: It then looks backward into history to contextualize that signal, checking if it is a genuine trend or just noise.
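The two phases can be sketched as below. This is an illustration under stated assumptions, not FinCatch's retrieval code: events are assumed to arrive already tagged with a topic, and the 365-day `lookback` and `min_history` threshold are hypothetical parameters chosen for the example. Phase 1 keeps only events in the 24-hour window ending at `now`; phase 2 counts earlier events on the same topic, looking only backward from the start of that window.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List


@dataclass(frozen=True)
class Event:
    topic: str
    doc_id: str
    published_at: datetime


def identify(events: List[Event], now: datetime,
             window: timedelta = timedelta(hours=24)) -> List[Event]:
    """Phase 1: fresh signals published in the (now - window, now] window only."""
    start = now - window
    return [e for e in events if start < e.published_at <= now]


def confirm(signals: List[Event], events: List[Event], now: datetime,
            window: timedelta = timedelta(hours=24),
            lookback: timedelta = timedelta(days=365),
            min_history: int = 3) -> Dict[str, bool]:
    """Phase 2: look backward from the window start for earlier evidence per topic.

    A topic counts as a trend if it has at least `min_history` earlier events;
    otherwise it is treated as noise. Nothing after `now` is ever consulted.
    """
    start = now - window
    history_start = start - lookback
    verdicts: Dict[str, bool] = {}
    for topic in sorted({s.topic for s in signals}):
        prior = [e for e in events
                 if e.topic == topic and history_start <= e.published_at <= start]
        verdicts[topic] = len(prior) >= min_history
    return verdicts


# Hypothetical events; topics and IDs are placeholders.
now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)
events = [
    Event("in-space manufacturing", "new-signal", now - timedelta(hours=2)),
    Event("in-space manufacturing", "older-1", now - timedelta(days=30)),
    Event("in-space manufacturing", "older-2", now - timedelta(days=60)),
    Event("in-space manufacturing", "older-3", now - timedelta(days=90)),
    Event("one-off rumor", "rumor", now - timedelta(hours=1)),
    Event("one-off rumor", "from-the-future", now + timedelta(days=1)),
]
signals = identify(events, now)
print([s.doc_id for s in signals])    # ['new-signal', 'rumor']
print(confirm(signals, events, now))  # {'in-space manufacturing': True, 'one-off rumor': False}
```

Counting prior mentions is the simplest possible confirmation rule; a richer rule could weigh source quality and recency, but the time boundaries are the part that protects against look-ahead bias.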

The Knowledge Graph Advantage

By grounding our AI in a Temporal Knowledge Graph, every claim is stored with a timestamp and provenance. This allows the system to explain trends not just by what happened, but by how they evolved over time. For example, in our case study on In-Space Manufacturing, the system could trace the trend's validity through specific launch missions and revenue reports, citing the exact dates those events occurred.
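A temporal knowledge graph can be approximated, for illustration, as a list of subject-predicate-object facts, each stamped with an observation date and a source. The sketch below is hypothetical: the `Fact` and `TemporalKG` names, the mission and company names, and the `stub://` sources are placeholders, not data from the In-Space Manufacturing case study. Querying with `as_of` returns a dated, cited timeline and drops anything observed after the cutoff.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass(frozen=True)
class Fact:
    """A knowledge-graph edge stamped with when it was observed and where from."""
    subject: str
    predicate: str
    obj: str
    observed_on: date
    source: str


class TemporalKG:
    def __init__(self) -> None:
        self._facts: List[Fact] = []

    def add(self, fact: Fact) -> None:
        self._facts.append(fact)

    def timeline(self, subject: str, as_of: date) -> List[Fact]:
        """Facts about `subject` known on or before `as_of`, oldest first."""
        known = [f for f in self._facts if f.subject == subject and f.observed_on <= as_of]
        return sorted(known, key=lambda f: f.observed_on)

    def explain(self, subject: str, as_of: date) -> List[str]:
        """Render the timeline as dated, source-cited lines."""
        return [f"{f.observed_on.isoformat()} {f.predicate} {f.obj} (source: {f.source})"
                for f in self.timeline(subject, as_of)]


kg = TemporalKG()
kg.add(Fact("in-space manufacturing", "launch", "Mission B", date(2023, 9, 5), "stub://mission-b"))
kg.add(Fact("in-space manufacturing", "launch", "Mission A", date(2023, 3, 14), "stub://mission-a"))
kg.add(Fact("in-space manufacturing", "revenue report", "Company X FY2023",
            date(2024, 2, 20), "stub://filing-x"))
for line in kg.explain("in-space manufacturing", as_of=date(2023, 12, 31)):
    print(line)
```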

3. Designing for Responsibility

Explainability is ultimately about responsibility. We evaluate our systems not just on accuracy, but on Safety, Disclaimers, and Overall Responsibility.

Auditability: Our agents maintain structured state variables, such as research plans and citation chains, that evolve across the conversation. This lets compliance teams audit exactly how an agent arrived at a conclusion.

Hard Constraints: We implement "guardrails" that prevent the AI from answering irrelevant or unsafe queries, ensuring the system stays within its financial fiduciary role.
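Both ideas can be sketched together: a state object whose audit log is append-only, and a scope check run on every incoming query. Everything here is an assumption for illustration: the field names, the keyword lists, and the decision labels are hypothetical, and simple keyword matching stands in for whatever classifier a production guardrail would use.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class ResearchState:
    """Structured state carried across a conversation and exposed to auditors."""
    research_plan: List[str] = field(default_factory=list)
    citations: List[str] = field(default_factory=list)
    audit_log: List[Dict[str, object]] = field(default_factory=list)

    def record(self, turn: int, action: str, detail: str) -> None:
        # Entries are only ever appended, so the log replays the agent's path.
        self.audit_log.append({"turn": turn, "action": action, "detail": detail})


# Hypothetical keyword lists; a production guardrail would likely use a classifier.
IN_SCOPE = ("stock", "portfolio", "earnings", "market", "bond", "dividend", "trend")
UNSAFE = ("insider", "guaranteed return", "evade tax")


def guardrail(query: str) -> str:
    """Classify a query as allowed, unsafe, or outside the financial remit."""
    q = query.lower()
    if any(term in q for term in UNSAFE):
        return "refuse_unsafe"
    if not any(term in q for term in IN_SCOPE):
        return "refuse_out_of_scope"
    return "allow"


state = ResearchState(research_plan=["Fetch latest ORCL price", "Summarize recent earnings coverage"])
queries = [
    "How did Oracle stock react to its latest earnings?",
    "Any insider tips on ORCL?",
    "What's a good pasta recipe?",
]
for turn, query in enumerate(queries, start=1):
    decision = guardrail(query)
    state.record(turn, "guardrail", decision)
    if decision == "allow":
        state.citations.append("stub://market-data/ORCL")  # hypothetical source id
print(json.dumps(asdict(state), indent=2))
```

Serializing the state with `json.dumps(asdict(state))` yields a record a compliance reviewer could read without access to the model itself.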

Conclusion: Trust is Engineered

The future of financial AI lies not in larger models but in smarter architectures. By constraining our agents to be traceable by design and temporally aware by default, we bridge the gap between complex expert analysis and accessible, trustworthy advice.

Whether it is advising a retail investor on Oracle stock or identifying emerging supply chain trends, the goal remains the same: an AI that doesn't just give you the answer, but gives you the reason to believe it.